Latest 10 recent news (see index)

March 14, 2024

March 2024 Image Release (and Raspberry Pi 5 support)

We’re pleased to announce that the 20240314 image set has been promoted to current and is now generally available.

You can find the new images on our downloads page and on our many mirrors.

Some highlights of this release:

- A keymap selector is now shown in LightDM on XFCE images (@classabbyamp in #354)

- The chrony NTP daemon is now enabled by default in live images (@classabbyamp in abbd636)

- Raspberry Pi images can now be installed on non-SD card storage without manual configuration on models that support booting from USB or NVMe (@classabbyamp in #361)

- Raspberry Pi images now default to a /boot partition of 256MiB instead of 64MiB (@classabbyamp in #368)

rpi-aarch64* PLATFORMFSes and images now support the Raspberry Pi 5. After installation, the kernel can be switched to the Raspberry Pi 5-specific rpi5-kernel.

You may verify the authenticity of the images by following the instructions on the downloads page, and using the following minisign key information:

untrusted comment: minisign public key A3FCFCCA9D356F86

RWSGbzWdyvz8o4nrhY1nbmHLF6QiFH/AQXs1mS/0X+t1x3WwUA16hdc/
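
As a rough sketch of what verification can look like, the helper below first checks a signed checksum file against the key above and then checks downloaded images against that file. The function name and the file names in the usage note are assumptions made for illustration; the downloads page remains the authoritative source for the exact steps.

```shell
# Public key string copied from the announcement above.
VOID_PUBKEY='RWSGbzWdyvz8o4nrhY1nbmHLF6QiFH/AQXs1mS/0X+t1x3WwUA16hdc/'

# verify_void_images SUMS SIG   (hypothetical helper)
#   SUMS: checksum file shipped alongside the images
#   SIG:  minisign signature for SUMS
verify_void_images() {
    sums=$1
    sig=$2
    # Step 1: check that the checksum file was signed by the release key.
    minisign -V -P "$VOID_PUBKEY" -x "$sig" -m "$sums" || return 1
    # Step 2: check the images in the current directory against that file.
    # --ignore-missing (GNU coreutils) skips images that were not downloaded.
    sha256sum -c --ignore-missing "$sums"
}
```

Run from the directory holding the downloads, a call might look like `verify_void_images sha256sum.txt sha256sum.minisig`, where both file names are assumed rather than taken from this announcement.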

January 22, 2024

Changes to xbps-src Masterdir Creation and Use

In an effort to simplify the usage of xbps-src, there has been a small change to how masterdirs (the containers xbps-src uses to build packages) are created and used.

The default masterdir is now called masterdir-<arch>, except when masterdir already exists or when using xbps-src in a container (where it’s still masterdir).
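
The naming rule can be sketched as a small shell function. This is only an illustration of the rule as described here, not xbps-src's actual code; the function name and the yes/no container switch are hypothetical.

```shell
# default_masterdir CHECKOUT ARCH [IN_CONTAINER]
# Prints the masterdir name that the rule described above would select.
# IN_CONTAINER is a hypothetical yes/no switch used only in this sketch.
default_masterdir() {
    checkout=$1
    arch=$2
    in_container=${3:-no}
    if [ -d "$checkout/masterdir" ] || [ "$in_container" = yes ]; then
        # A pre-existing masterdir, or running inside a container, keeps the old name.
        echo masterdir
    else
        echo "masterdir-$arch"
    fi
}
```

For a fresh checkout, `default_masterdir ~/void-packages x86_64-musl` would print `masterdir-x86_64-musl`.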

Creation

When creating a masterdir for an alternate architecture or libc, the previous syntax was:

./xbps-src -m <name> binary-bootstrap <arch>

Now, the <arch> should be specified using the new -A (host architecture) flag:

./xbps-src -A <arch> binary-bootstrap

This will create a new masterdir called masterdir-<arch> in the root of your void-packages repository checkout.

Arbitrarily-named masterdirs can still be created with -m <name>.

Usage

Instead of specifying the alternative masterdir directly, you can now use the -A (host architecture) flag to use the masterdir-<arch> masterdir:

./xbps-src -A <arch> pkg <pkgname>

Arbitrarily-named masterdirs can still be used with -m <name>.
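
Putting creation and usage together, a wrapper along these lines could bootstrap the per-architecture masterdir on first use and then build a package in it. The wrapper name and its arguments are hypothetical; only the two xbps-src invocations come from the text above.

```shell
# build_for_arch CHECKOUT ARCH PKGNAME   (hypothetical wrapper)
# The body runs in a subshell so the caller's working directory is untouched.
build_for_arch() (
    checkout=$1
    arch=$2
    pkg=$3
    cd "$checkout" || exit 1
    # Bootstrap masterdir-<arch> only if it does not exist yet.
    if [ ! -d "masterdir-$arch" ]; then
        ./xbps-src -A "$arch" binary-bootstrap || exit 1
    fi
    # Build the package inside masterdir-<arch>.
    ./xbps-src -A "$arch" pkg "$pkg"
)
```

For example, `build_for_arch ~/void-packages x86_64-musl <pkgname>` would create masterdir-x86_64-musl on the first run and reuse it afterwards; the checkout path and architecture are example values.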

January 22, 2024

Welcome New Contributors!

The Void project is pleased to welcome aboard 2 new members.

Joining us to work on packages are @oreo639 and @cinerea0.

Interested in seeing your name in a future update here? Read our Contributing Page and find a place to help out! New members are invited from the community of contributors.

January 04, 2024

glibc 2.38 Update Issues and Solutions

With the update to glibc 2.38, libcrypt.so.1 is no longer provided by glibc. Libcrypt is an important library for several core system packages that use cryptographic functions, including pam. The library has changed versions, and the legacy version is still available for precompiled or proprietary applications. The new version is available on Void as libxcrypt and the legacy version is libxcrypt-compat.

With this change, some kinds of partial upgrades can leave PAM unable to function. This breaks tools like sudo, doas, and su, as well as breaking authentication to your system. Symptoms include messages like “PAM authentication error: Module is unknown”. If this has happened to you, you can either:

- add init=/bin/sh to your kernel command-line in the bootloader and downgrade glibc,

- or mount the system’s root partition in a live environment, chroot into it, and install libxcrypt-compat

Either of these steps should allow you to access your system as normal and run a full update.

To ensure the disastrous partial upgrade (described above) cannot happen, glibc-2.38_3 now depends on libxcrypt-compat. With this change, it is safe to perform partial upgrades that include glibc 2.38.

October 24, 2023

Changes to Repository Sync

Void is a complex system, and over time we make changes to reduce this complexity, or shift it to easier to manage components. Recently, through the fantastic work of one of our maintainers, classabbyamp, our repository sync system has been dramatically improved.

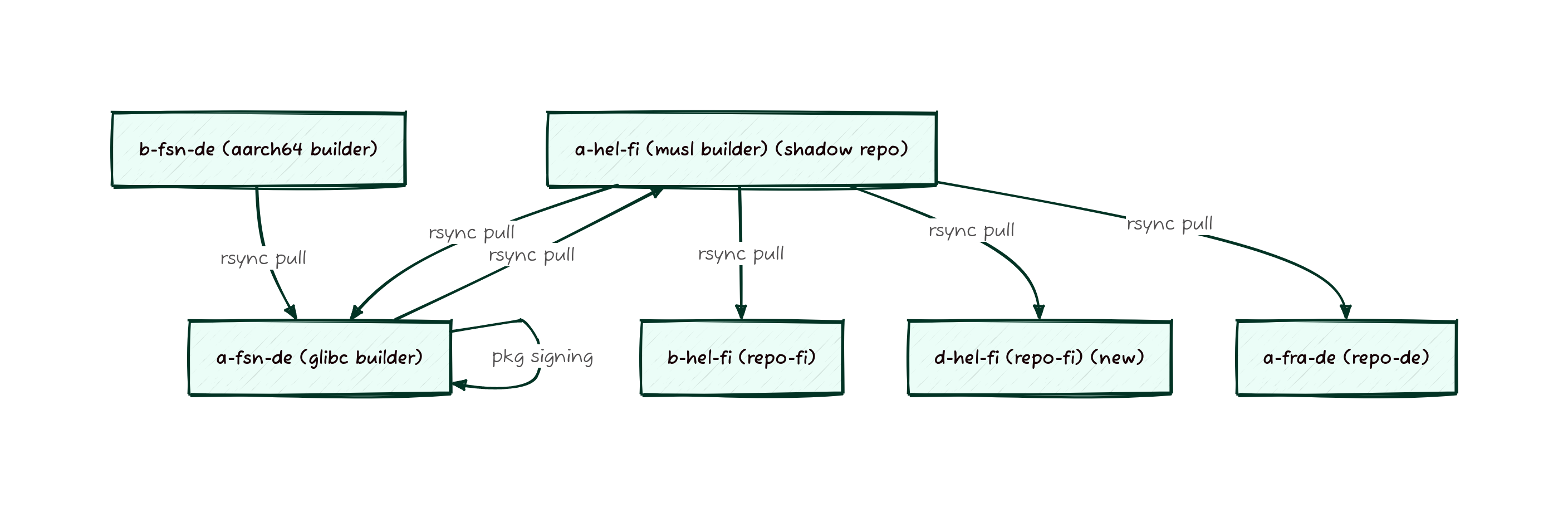

Previously our system was based on a series of host managed rsyncs running on either snooze or cron based timers. These syncs would push files to a central location to be signed and then distributed. This central location is sometimes referred to as the “shadow repo” since it’s not directly available to end users to synchronize from, and we don’t usually allow anyone outside Void to have access to it.

As you might have noticed from the Fastly Overview the packages take a long path from builders to repos. What is not obvious from the graph shown is that the shadow repo previously lived on the musl builder, meaning that packages would get built there, copied to the glibc builder, then copied back to the musl builder and finally copied to a mirror. So many copies! To streamline this process, the shadow mirror is now just the glibc server, since that’s where the packages have to wind up for architectural reasons anyway. This means we were able to cut out 2 rsyncs and reclaim a large amount of space on the musl builder, making the entire process less fragile and more streamlined.

But just removing rsyncs isn’t all that was done. To improve the time it takes for packages to make it to users, we’ve also switched the builders from using a time based sync to using lsyncd to take more active management of the synchronization process. In addition to moving to a more sustainable sync process, the entire process was moved up into our Nomad managed environment. Nomad allows us to more easily update services, monitor them for long term trends, and to make it clearer where services are deployed.

In addition to fork-lifting the sync processes, we also forklifted void-updates, xlocate, xq-api (package search), and the generation of the docs-site into Nomad. These changes represent some of the very last services that were not part of our modernized container orchestrated infrastructure.

Visually, this is what the difference looks like. Here’s before:

And here’s what the sync looks like now, note that there aren’t any cycles for syncs now:

If you run a downstream mirror we need your help! If your mirror has existed for long enough, it’s possible that you were still synchronizing from alpha.de.repo.voidlinux.org, which has been a dead servername for several years now. Since moving around sync traffic is key to our ability to keep the lights on, we’ve provisioned a new dedicated DNS record for mirrors to talk to. The new repo-sync.voidlinux.org is the preferred origin point for all sync traffic and using it means that we can transparently move the sync origin during maintenance rather than causing an rsync hang on your sync job. Please check where you’re mirroring from and update accordingly.
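
For mirror operators, a check along these lines may help find and update a sync script that still points at the old name. The two function names are hypothetical, and the sketch assumes your sync job is a plain text file (a script or crontab fragment) that contains the origin hostname.

```shell
OLD_ORIGIN='alpha.de.repo.voidlinux.org'

# uses_old_origin FILE: succeeds if FILE still mentions the dead hostname.
uses_old_origin() {
    grep -qF "$OLD_ORIGIN" "$1"
}

# switch_origin FILE: rewrite FILE in place to use repo-sync.voidlinux.org.
switch_origin() {
    file=$1
    tmp=$(mktemp) || return 1
    # Dots are escaped so they match literally in the sed pattern.
    sed 's/alpha\.de\.repo\.voidlinux\.org/repo-sync.voidlinux.org/g' "$file" > "$tmp" &&
        cat "$tmp" > "$file"
    status=$?
    rm -f "$tmp"
    return "$status"
}
```

A typical use would be `uses_old_origin /path/to/sync-script && switch_origin /path/to/sync-script`, after which it is worth reviewing the file by hand before the next sync run.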

October 07, 2023

Changes to Python 3 and pip on Void

Happy Pythonmas! It’s October, which means it’s Python 3 update season. This year, along with the usual large set of updates for Python packages, a safety feature for pip, the Python package manager, has been activated. To ensure that Python packages installed via XBPS and those installed via pip don’t interfere with one another, the system-wide Python environment has been marked as “externally managed”.

If you try to use pip3 or pip3 --user outside of a Python virtual environment, you may see this error that provides guidance on how to deploy a virtual environment suitable for use with pip:

This system-wide Python installation is managed by the Void Linux package

manager, XBPS. Installation of Python packages from other sources is not

normally allowed.

To install a Python package not offered by Void Linux, consider using a virtual

environment, e.g.:

python3 -m venv /path/to/venv

/path/to/venv/pip install <package>

Appending the flag --system-site-packages to the first command will give the

virtual environment access to any Python package installed via XBPS.

Invoking python, pip, and executables installed by pip in /path/to/venv/bin

should automatically use the virtual environment. Alternatively, source its

activation script to add the environment to the command search path for a shell:

. /path/to/venv/activate

After activation, running

deactivate

will remove the environment from the search path without destroying it.

The XBPS package python3-pipx provides pipx, a convenient tool to automatically

manage virtual environments for individual Python applications.

You can read more about this change on Python’s website in PEP 668.
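
PEP 668 describes the marker as a file named EXTERNALLY-MANAGED in the interpreter's standard library directory. A minimal sketch for checking whether a given Python is marked this way follows; both helper names are hypothetical, and the check deliberately ignores virtual environments, where pip skips the marker.

```shell
# python_stdlib_dir: ask the interpreter where its standard library lives.
python_stdlib_dir() {
    python3 -c 'import sysconfig; print(sysconfig.get_path("stdlib"))'
}

# is_externally_managed STDLIB_DIR
# Succeeds if the PEP 668 marker file exists in the given directory.
is_externally_managed() {
    [ -e "$1/EXTERNALLY-MANAGED" ]
}
```

For example, `is_externally_managed "$(python_stdlib_dir)" && echo "use a virtual environment or pipx"` prints the hint only on systems where the marker is present.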

To simplify the use of Void-based containers, all Void container images tagged 20231003R1 or later will explicitly ignore the “externally managed” marker. Containers based on these images will still be able to use pip to install Python packages in the container-wide environment.

Here Be Dragons

If you really want to be able to install packages with pip in the system- or user-wide Python environment, there are several options, but beware: this can cause hard-to-debug issues with Python applications, or issues when updating with XBPS.

- Use pip’s --break-system-packages flag. This only applies to the current invocation.

- Set pip’s configuration: pip3 config set install.break-system-packages True. This will apply to all future invocations.

- Add a noextract=/usr/lib/python*/EXTERNALLY-MANAGED rule to your XBPS configuration and re-install the python3 package. This will apply to all future invocations.

August 30, 2023

Changes to Container Images

To simplify the container experience, we’ve revamped the way Void’s OCI container images are built and tagged.

In short:

- Architectures are now encoded using the platform in the manifest instead of in tags

- Different libcs and flavors of images are now separate images instead of tags

- The mini flavor is no longer built, as it did not work as intended

You can check out the available images on the Download page or on GitHub.

If you’re interested in the technical details, you can take a look at the pull request for these changes.

To migrate your current containers:

| Old Image | New Image | Notes |
|---|---|---|
| voidlinux/voidlinux | ghcr.io/void-linux/void-glibc | Wow, you’ve been using two-year-old images! |
| voidlinux/voidlinux-musl | ghcr.io/void-linux/void-musl | |
| ghcr.io/void-linux/void-linux:*-full-* | ghcr.io/void-linux/void-glibc-full | |
| ghcr.io/void-linux/void-linux:*-full-*-musl | ghcr.io/void-linux/void-musl-full | |
| ghcr.io/void-linux/void-linux:*-thin-* | ghcr.io/void-linux/void-glibc | |
| ghcr.io/void-linux/void-linux:*-thin-*-musl | ghcr.io/void-linux/void-musl | |
| ghcr.io/void-linux/void-linux:*-mini-* | ghcr.io/void-linux/void-glibc | mini images are no longer built |
| ghcr.io/void-linux/void-linux:*-mini-*-musl | ghcr.io/void-linux/void-musl | |
| ghcr.io/void-linux/void-linux:*-thin-bb-* | ghcr.io/void-linux/void-glibc-busybox | |
| ghcr.io/void-linux/void-linux:*-thin-bb-*-musl | ghcr.io/void-linux/void-musl-busybox | |
| ghcr.io/void-linux/void-linux:*-mini-bb-* | ghcr.io/void-linux/void-glibc-busybox | mini images are no longer built |
| ghcr.io/void-linux/void-linux:*-mini-bb-*-musl | ghcr.io/void-linux/void-musl-busybox | |
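
If you have many image references to update, the table can be encoded as a lookup. The function below is a sketch under that reading of the table; its name is hypothetical, and the tags in the usage note are made-up examples of the old tag scheme.

```shell
# new_void_image OLD_REF: print the replacement image for an old reference.
# More specific patterns come first because case stops at the first match.
new_void_image() {
    old=$1
    repo='ghcr.io/void-linux'
    case $old in
        voidlinux/voidlinux-musl | voidlinux/voidlinux-musl:*)
            echo "$repo/void-musl" ;;
        voidlinux/voidlinux | voidlinux/voidlinux:*)
            echo "$repo/void-glibc" ;;
        "$repo"/void-linux:*-full-*-musl)
            echo "$repo/void-musl-full" ;;
        "$repo"/void-linux:*-full-*)
            echo "$repo/void-glibc-full" ;;
        "$repo"/void-linux:*-thin-bb-*-musl | "$repo"/void-linux:*-mini-bb-*-musl)
            echo "$repo/void-musl-busybox" ;;
        "$repo"/void-linux:*-thin-bb-* | "$repo"/void-linux:*-mini-bb-*)
            echo "$repo/void-glibc-busybox" ;;
        "$repo"/void-linux:*-thin-*-musl | "$repo"/void-linux:*-mini-*-musl)
            echo "$repo/void-musl" ;;
        "$repo"/void-linux:*-thin-* | "$repo"/void-linux:*-mini-*)
            echo "$repo/void-glibc" ;;
        *)
            echo "no known replacement for $old" >&2
            return 1 ;;
    esac
}
```

For instance, `new_void_image ghcr.io/void-linux/void-linux:latest-thin-bb-x86_64-musl` prints `ghcr.io/void-linux/void-musl-busybox`. Because the architecture is now selected through the manifest platform, the architecture part of the old tag is simply dropped.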

July 21, 2023

Infrastructure Week - Day 5: Making Distributed Infrastructure Work for Distributed Teams

Void runs a distributed team of maintainers and contributors. Making infrastructure work for any team is a confluence of goals, user experience choices, and hard requirements. Making infrastructure work for a distributed team adds on the complexity of accessing everything securely over the open internet, and doing so in a way that is still convenient and easy to set up. After all, a light switch that is difficult to use is likely to lead to lights being left on.

We take several design criteria into mind when designing new systems and services that make Void work. We also periodically re-evaluate systems that have been built to ensure that they still follow good design practices in a way that we are able to maintain, and that does what we want. Let’s dive in to some of these design practices.

No Maintainer Facing VPNs

VPNs, or Virtual Private Networks, are ways of interconnecting systems such that the network in between appears to vanish beneath a layer of abstraction. WireGuard, OpenVPN, and IPSec are examples of VPNs. With OpenVPN and IPSec, a client program handles encryption and decryption of traffic on a tunnel or tap device that translates packets into and out of the kernel network stack. If you work in a field that involves using a computer for your job, your employer may make use of a VPN to grant your device connectivity to their corporate network environment without you having to be physically present in a building. VPN technologies can also be used to make multiple physical sites appear to all be on the same network.

Void uses WireGuard to provide machine-to-machine connectivity for our fleet, but only within our fleet. Maintainers always access services without a VPN. Why do we do this, and how do we do it? First the why. We operate in this way because corporate VPNs are often cumbersome, require split horizon DNS (where you get different DNS answers depending on where you resolve from) and require careful planning to make sure no subnet overlap occurs between the VPN, the network you are connecting to, and your local network. If there were an overlap, the kernel would be unable to determine where to send the packets since it has multiple routes for the same subnets. There are cases where this is a valid network topology (ECMP), but that is not what is being discussed here. We also have no reason to use a VPN. Most of the use cases that still require a VPN have to do with transporting arbitrary TCP streams across a network, but this is unnecessary. For Void, all our services are either HTTP based or are transported over SSH.

For almost all our systems that we interact with daily, either a web interface or HTTP-based API is provided. For the devspace file hosting system, maintainers can use SFTP via SSH. Both HTTP and SSH have robust, extremely well tested authentication and encryption options. When designing a system for secure access, defense in depth is important, but so is trust that the cryptographic primitives you have selected actually work. We trust that HTTPS works, and so there is no need to wrap the connection in an additional layer of encryption. The same goes for SSH, for which we exclusively use public-key authentication. Although this choice is sometimes challenging to maintain, since it means that we need to ensure highly available HTTP proxies and secure, easily maintained SSH key implementations, we have found it works well for us. In addition to the static files that all our tier 1 mirrors serve, the mirrors are additionally capable of acting as proxies. This allows us to terminate the externally trusted TLS session at a webserver running nginx, and then pass the traffic over our internal encrypted fabric to the destination service.

For SSH we simply make use of AuthorizedKeysCommand to summon keys from NetAuth allowing authorized maintainers to log onto servers or ssh-enabled services wherever their keys are validated. For the devspace service which has a broader ACL than our base hardware, we can enhance its separation by running an SFTP server distinct from the host sshd. This allows us to ensure that it is impossible for a key validated for devspace to inadvertently authorize a shell login to the underlying host.
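
To illustrate the mechanism only: sshd runs the configured AuthorizedKeysCommand with the login name and reads authorized_keys-style lines from its standard output. The lookup below is a hypothetical stand-in that reads keys from a directory; it is not how NetAuth serves keys, and the directory layout and script path are assumptions.

```shell
# Hypothetical stand-in for a key lookup helper. sshd_config would contain
# something like:
#   AuthorizedKeysCommand /usr/local/bin/lookup-keys %u
#   AuthorizedKeysCommandUser nobody
KEY_DIR=${KEY_DIR:-/var/lib/keys}   # assumed layout: one key file per user

# lookup_keys USER: print the user's public keys, or fail if there are none.
lookup_keys() {
    user=$1
    # Refuse empty names and names with a slash so a login name cannot
    # escape KEY_DIR.
    case $user in
        '' | . | .. | */*) return 1 ;;
    esac
    [ -f "$KEY_DIR/$user" ] && cat "$KEY_DIR/$user"
}
```

When the command prints no keys or exits with an error, sshd finds no match from it, so the login is refused unless another configured key source or method accepts the user.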

For all other services, we make use of the service level authentication as and when required. We use combinations of Native NetAuth, LDAP proxies, and PAM helpers to make all access seamless for maintainers via our single sign on system. Removing the barrier of a VPN also means that during an outage, there’s one less component we need to troubleshoot and debug, and one less place for systems to break.

Use of Composable Systems

Distributed systems are often made up of complex, interdependent sub-assemblies. This level of complexity is fine for dedicated teams who are paid to maintain systems day in and day out, but is difficult to pull off with an all-volunteer team that works on Void in their free time. Distributed systems are also best understood on a whiteboard, and this doesn’t lend itself well to making a change on a laptop from a train, or reviewing a delta from a tablet between other tasks. While substantive changes are almost always made from a full terminal, the ratio of substantive changes to items requiring only quick verification is significant, and it’s important to maintain a level of understand-ability.

In order to maintain the level of understand-ability of the infrastructure at a level that permits a reasonable time investment, we make use of composable systems. Composable systems can best be thought of as infrastructure built out of common sub-assemblies. Think Lego blocks for servers. This allows us to have a common base library of components, for example webservers, synchronization primitives, and timers, and then build these into complex systems through joining their functionality together.

We primarily use containers to achieve this composability. Each container performs a single task or a well defined sub-process in a larger workflow. For example we can look at the workflow required to serve https://man.voidlinux.org/. In this workflow, a task runs periodically to extract all man pages from all packages, then another process runs to copy those files to the mirrors, and finally a process runs to produce an HTTP response to a given man page request. Notice there that it’s an HTTP response, but the man site is served securely over HTTPS. This is because across all of our web-based services we make use of common infrastructure such as load balancers and our internal network. This allows applications to focus on their individual functions without needing to think about the complexity of serving an encrypted connection to the outside world.

By designing our systems this way, we also gain another neat feature: local testing. Since applications can be broken down into smaller building blocks, we can take just the single building block under scrutiny and run it locally. Likewise, we can upgrade individual components of the system to determine if they improve or worsen a problem. With some clever configuration, we can even upgrade half of a system that’s highly available and compare the old and new implementations side by side to see if we like one over the other. This composability enables us to configure complex systems as individual, understandable components.

It’s worth clarifying though that this is not necessarily a microservices architecture. We don’t really have any services that could be defined as microservices in the conventional sense. Instead this architecture should be thought of as the Unix Philosophy as applied to infrastructure components. Each component has a single well understood goal and that’s all it does. Other goals are accomplished by other services.

We assemble all our various composed services into the service suite that Void provides via our orchestration system (Nomad) and our load balancers (nginx) which allow us to present the various disparate systems as though they were one to the outside world, while still maintaining them as separate service “verticals” side by side internally.

Everything in Git

Void’s packages repo is a large git repo with hundreds of contributors and many maintainers. This package bazaar contains all manner of different software that is updated, verified, and accepted by a team that spans the globe. Our infrastructure is no different, but involves fewer people. We make use of two key systems to enable our Infrastructure as Code (IaC) approach.

The first of these tools is Ansible. Ansible is a configuration management utility written in Python which can programmatically SSH into machines, template files, install and remove packages and more. Ansible takes its instructions as collections of YAML files called roles that are assembled into playbooks (composability!). These roles come from either the main void-infrastructure repo, or as individual modules from the void-ansible-roles organization on GitHub. Since this is code checked into Git, we can use ansible-lint to ensure that the code is consistent and lint-free. We can then review the changes as a diff, and work on various features on branches just like changes to void-packages. The ability to review what changed is also a powerful debugging tool to allow us to see if a configuration delta led to or resolved a problem, and if we’ve encountered any similar kind of change in the past.

The second tool we use regularly is Terraform. Whereas Ansible configures servers, Terraform configures services. We can apply Terraform to almost any service that has an API as most popular services that Void consumes have Terraform providers. We use Terraform to manage our policy files that are loaded into Nomad, Consul and Vault, we use it to provision and deprovision machines on DigitalOcean, Google and AWS, and we use it to update our DNS records as services change. Just like Ansible, Terraform has a linter, a robust module system for code re-use, and a really convenient system for producing a diff between what the files say the service should be doing and what it actually is doing.

Perhaps the most important use of Terraform for us is the formalized onboarding and offboarding process for maintainers. When a new maintainer is proposed and has been accepted through discussion within the Void team, we’ll privately reach out to them to ask if they want to join the project. Given that a candidate accepts the offer to join the group of pkg-committers, the action that formally brings them on to the team is a patch applied to the Terraform that manages our GitHub organization and its members. We can then log approvals, welcome the new contributor to our team with suitable emoji, and grant access all in one convenient place.

Infrastructure as Code allows our distributed team to easily maintain our complex systems with a written record that we can refer back to. The ability to defer changes to an asynchronous review is imperative to manage the workflows of a distributed team.

Good Lines of Communication

Of course, all the infrastructure in the world doesn’t help if the people using it can’t effectively communicate. To make sure this issue doesn’t occur for Void, we have multiple forms of communication with different features. For real-time discussions and even some slower ones, we make use of IRC on Libera.chat. Though many communities appear to be moving away from synchronous text, we find that it works well for us. IRC is a great protocol that allows each member of the team to connect using the interface that they believe is the best for them, as well as to allow our automated systems to connect in as well.

For conversations that need more time or are generally going to be longer we make use of email or a group-scoped discussion on GitHub. This allows for threaded messaging and a topic that can persist for days or weeks if needed. Maintaining a long running thread can help us tease apart complicated issues or ensure everyone’s voice is heard. Long time users of Void may remember our forum, which has since been supplanted by a subreddit and most recently GitHub Discussions. These threaded message boards are also examples of places that we converse and exchange status information, but in a more social context.

For discussion that needs to pertain directly to our infrastructure, we open tickets against the infrastructure repo. This provides an extremely clear place to report issues, discuss fixes, and collate information relating to ongoing work. It also allows us to leverage GitHub’s commit message parsing to automatically resolve a discussion thread once a fix has been applied by closing the issue. For really large changes, we can also use GitHub projects, though in recent years we have not made use of this particular organization system for issues (we use tags).

No matter where we converse though, it’s always important to make sure we converse clearly and concisely. Void’s team speaks a variety of languages, though we mostly converse in English, which is not known for its intuitive clarity. When making hazardous changes, we often push changes to a central location and ask for explicit review of dangerous parts, and call out clearly what the concerns are and what requires review. In this way we ensure that all of Void’s various services stay up, and our team members stay informed.

This post was authored by maldridge, who runs most of the day-to-day operations of the Void fleet. On behalf of the entire Void team, I hope you have enjoyed this week’s dive into the infrastructure that makes Void happen, and have learned some new things. We’re always working to improve systems and make them easier to maintain or provide more useful features, so if you want to contribute, join us in IRC. Feel free to ask questions about this post or any of our others this week on GitHub Discussions or in IRC.

July 20, 2023

Infrastructure Week - Day 4: Downtime Both Planned and Not

Yesterday we looked at what Void does to monitor the various services and systems that provide all our services, and how we can be alerted when issues occur. When we’re alerted, this means that whatever’s gone wrong needs to be handled by a human, but not always. Sometimes an alert can trip if we have systems down for planned maintenance activities. During these windows, we intentionally take down services in order to repair, replace, or upgrade components so that we don’t have unexpected breakage later.

Planned Downtime

When possible, we always prefer for services to go down during a planned maintenance window. This allows for services to come down cleanly and for people involved to have planned for the time investment to effect changes to the system. We take planned downtime when it’s not possible to make a change to a system with it up, or when it would be unsafe to do so. Examples of planned downtime include kernel upgrades, major version changes of container runtimes, and major package upgrades.

When we plan for an interruption, the relevant people will agree on a date usually at least a week in the future and will talk about what the impacts will be. Based on these conversations the team will then decide whether or not to post a blog post or notification to social media that an interruption is coming. Most of the changes we do don’t warrant this, but some changes will interrupt services in either an unintuitive way or for an extended period of time. Usually just rebooting a mirror server doesn’t warrant a notification, but suspending the sync to one for a few days would.

Unplanned Downtime

Unplanned downtime is usually much more exciting because it is by definition unexpected. These events happen when something breaks. By and large the most common way that things break for Void is running out of space on disk. This happens because while disk drives are cheap, getting a drive that can survive years powered on with high read/write load is still not a straightforward ask, especially if you also want high-performance throughput with low latency. The build servers need large volumes of scratch space while building certain packages due to the need to maintain large caches or lots of object files prior to linking. These large elastic use cases mean that we can have hundreds of gigabytes of free space and then over the course of a single build run out of space.

When this happens, we have to log on to a box and look at where we can reclaim some space and possibly dispatch builds back through the system one architecture at a time to ensure they use low enough space requirements to complete. We also have to make sure that when we clean space, we’re not cleaning files that will be immediately redownloaded. One of the easiest places to claim space back from, after all, is the cache of downloaded files. The primary point of complication in this workflow can be getting a build to restart. Sometimes we have builds that get submitted in specific orders and when a crash occurs in the middle we may need to re-queue the builds to ensure dependencies get built in the right order.
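
As a small illustration of the kind of check involved, the helpers below report available space and compare it against a threshold before more work is queued. They are a generic sketch, not Void's tooling; the path and threshold in the usage note are made-up values.

```shell
# free_kib PATH: available space on the filesystem holding PATH, in KiB.
free_kib() {
    # -P forces the portable one-line-per-filesystem format; -k reports KiB.
    df -Pk "$1" | awk 'NR == 2 { print $4 }'
}

# has_room PATH MIN_KIB: succeeds if at least MIN_KIB KiB are available.
has_room() {
    [ "$(free_kib "$1")" -ge "$2" ]
}
```

A guard such as `has_room /hostdir 52428800 || echo "reclaim space before queueing builds"` would warn when less than 50 GiB remains on the (hypothetical) build volume.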

Sometimes downtime occurs due to network partitions. Void runs in many datacenters around the globe, and incidents ranging from street repaving to literal ship anchors can disrupt the fiber optic cables connecting our various network sites together. When this happens, we can often arrive upon a state where people can see both sides of the split, but our machines can’t see each other anymore. Sometimes we’re able to fix this by manually reloading routes or cycling tunnels between machines, but oftentimes it’s easier for us to just drain services from an affected location and wait out the issue using our remaining capacity elsewhere.

Lessening the Effects of Downtime

As was alluded to with network partitions, we take a lot of steps to mitigate downtime and the effects of unplanned incidents. A large part of this effort goes into making as much content as possible static so that it can be served from minimal infrastructure, usually nothing more than an nginx instance. This is how the docs, infrastructure docs, main website, and a number of services like xlocate work. There’s a batch task that runs to refresh the information, it gets copied to multiple servers, and then as long as at least one of those servers remains up the service remains up.

Mirrors of course are highly available by being byte-for-byte copies of each other. Since the mirrors are static files, they’re easy to make available redundantly. We also configure all mirrors to be able to serve under any name, so during an extended outage, the DNS entry for a given name can be changed and the traffic serviced by another mirror. This allows us to present the illusion that the mirrors don’t go down when we perform longer maintenance at the cost of some complexity in the DNS layer. The mirrors don’t just host static content though. We also serve the https://man.voidlinux.org site from the mirrors, which requires a CGI executable and a collection of static man pages to be available. The nginx frontends on each mirror are configured to first seek out their local services, but if those are unavailable they will reach across Void’s private network to find an instance of the service that is up.

This private network is a mesh of WireGuard tunnels that span all our different machines and different providers. You can think of it like a multi-cloud VPC which enables us to ignore a lot of the complexity that would otherwise manifest when operating in a multi-cloud design pattern. The private network also allows us to use distributed service instances while still fronting them through relatively few points. This improves security because very few people and places need access to the certificates for voidlinux.org, as opposed to the certificates having to be present on every machine.

For services that are containerized, we have an additional set of tricks available that can let us lessen the effects of a downed server. As long as the task in question doesn’t require access to specific disks or data that are not available elsewhere, Nomad can reschedule the task to some other machine and update its entry in our internal service catalog so that other services know where to find it. This allows us to move things like our IRC bots and some parts of our mirror control infrastructure around when hosts are unavailable, rather than those services having to be unavailable for the duration of a host level outage. If we know that the downtime is coming in advance, we can actually instruct Nomad to smoothly remove services from the specific machine in question and relocate those services somewhere else. When the relocation is handled as a specific event rather than as the result of a machine going away, the service interruption is measured in seconds.
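
A planned relocation of this kind is typically done with Nomad's node drain command. The wrappers below are a hedged sketch: the function names and the default deadline are assumptions, and the node ID is whatever `nomad node status` reports for the machine in question.

```shell
# drain_node NODE_ID [DEADLINE]   (hypothetical wrapper)
# Asks Nomad to migrate tasks off the node before maintenance begins.
drain_node() {
    node=$1
    deadline=${2:-10m}   # assumed grace period for tasks to move elsewhere
    nomad node drain -enable -deadline "$deadline" -yes "$node"
}

# undrain_node NODE_ID: end the drain once maintenance is finished.
undrain_node() {
    nomad node drain -disable -yes "$1"
}
```

Tasks that depend on disks or data only present on the drained machine cannot be moved this way, which matches the caveat in the paragraph above.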

Design Choices and Trade-offs

Of course there is no free lunch, and these choices come with trade-offs. Some of the design choices we’ve made have to do with the difference in effort required to test a service locally and debug it remotely. Containers help a lot with this process since it’s possible to run the exact same image with the exact same code in it as what is running in the production instance. This also lets us insulate Void’s infrastructure from breakage caused by a bad update, since each service is encapsulated and updated on its own. We simply review each service’s behavior as it is updated, and this results in a clean migration path from one version to another with far less doubt about whether it will work. If we do discover a problem, the infrastructure is checked into git and the old versions of the containers are retained, so we can easily roll back.

We leverage the containers to make the workflows easier to debug in the general case, but of course the complexity doesn’t go away. It’s important to understand that container orchestrators don’t remove complexity; quite to the contrary, they increase it. What they do is shift and concentrate the complexity from one group of people (application developers) to another (infrastructure teams). This shift means fewer people need to care about the specifics of running applications or deploying servers, since they truly can say “well it works on my machine” and be reasonably confident that the same container will work when deployed on the fleet.

The last major trade-off that we make when deciding where to run something is thinking about how hard it will be to move later if we decide we’re unhappy with the provider. Void is actually currently in the process of migrating our email server from one host to another at the time of writing due to IP reputation issues at our previous hosting provider. In order to make it easier to perform the migration, we deployed the mail server originally as a container via Nomad, which means that standing up the new mail server is as easy as moving the DNS entries and telling Nomad that the old mail server should be drained of workload.

Our infrastructure only works as well as the software running on it, but we do spend a lot of time making sure that the experience of developing and deploying that software is as easy as possible.

This has been day four of Void’s infrastructure week. Tomorrow we’ll wrap up the series with a look at how we make distributed infrastructure work for our distributed team. This post was authored by maldridge who runs most of the day to day operations of the Void fleet. Feel free to ask questions on GitHub Discussions or in IRC.

July 19, 2023

Infrastructure Week - Day 3: Are We Down?

Are We Down?

So far we’ve looked at a relatively sizable fleet of machines scattered across a number of different providers, technologies, and management styles. We’ve then looked at the myriad of services that were running on top of the fleet and the tools used to deploy and maintain those services. At its heart, Void is a large distributed system with many parts working in concert to provide the set of features that end users and maintainers engage with.

Like any machine, Void’s infrastructure has wear items, parts that require replacement, and components that break unexpectedly. When this happens we need to identify the problem, determine the cause, formulate a plan to return to service, and execute a set of workflows to either permanently resolve the issue, or temporarily bypass a problem to buy time while we work on a more permanent fix.

Let’s go through the different systems and services that allow us to work out what’s gone wrong, or what’s still going right. We can broadly divide these systems into two kinds of monitoring solutions: logs and metrics. Logs are easy to understand conceptually because they exist all around us on every system. Metrics are a bit more abstract, and usually measure specific quantifiable qualities of a system or service. Void makes use of both logs and metrics to determine how the fleet is operating.

Metrics

Metrics quantify some part of a system. You can think of metrics as a wall of gauges and charts that measure how a system works, similarly to the dashboard of a car that provides information about the speed of the vehicle, the rotational speed of the engine, and the coolant temperature and fuel levels. In Void’s case, metrics refers to quantities like available disk space, number of requests per minute to a webserver, time spent processing a mirror sync and other similar items.

We collect these metrics to a central point on a dedicated machine using Prometheus, which is a widely adopted metrics monitoring system. Prometheus “scrapes” all our various sources of metrics by downloading data from them over HTTP, parsing it, and adding it to a time-series database. From this database we can then query for how a metric has changed over time in addition to whatever its current value is. On the surface this is not that interesting, but it turns out to be extremely useful. Humans are really good at pattern recognition, but machines are still better: we can have Prometheus predict trend lines, compute rates and compare them, and line up a bunch of different metrics onto the same graph so we can compare what different values were reading at the same time.
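As a rough illustration of what "computing a rate" from a time series means, the sketch below derives a per-second rate from a handful of counter samples. It is a simplification: Prometheus’s own `rate()` function also handles counter resets and extrapolation, which this does not. The sample data and the function name are hypothetical.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (unix timestamp in seconds, counter value)


def per_second_rate(samples: List[Sample]) -> float:
    """Average per-second increase between the first and last sample."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    (t0, v0), (t1, v1) = samples[0], samples[-1]
    if t1 <= t0:
        raise ValueError("samples must be in increasing time order")
    return (v1 - v0) / (t1 - t0)


# Hypothetical request counter scraped every 15 seconds.
requests_total = [(0.0, 100.0), (15.0, 130.0), (30.0, 190.0), (45.0, 220.0), (60.0, 280.0)]
rate = per_second_rate(requests_total)  # 180 requests over 60 seconds
```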

The metrics that Prometheus fetches come from programs that are collectively referred to as exporters. These exporters export the status information of the system they integrate with. Let’s look at the individual exporters that Void uses and some examples of the metrics they provide.

Node Exporter

Perhaps the most widely deployed exporter, the node_exporter provides information about nodes. In this case a node is a server somewhere, and the exporter provides a lot of general information about how the server is performing. Since it is a generic exporter, we get many many metrics out of it, not all of which apply to the Void fleet.

Some of the metrics that are exported include the status of the disk, memory, cpu and network, as well as more specialized information such as the number of context switches and various kernel level values from /proc.

SSL Exporter

The SSL Exporter provides information about the various TLS certificates in use across the fleet. It does this by probing the remote services to retrieve the certificate and then extracting values from it. Having these values allows us to alert on certificates that are expiring soon and have failed to renew, as well as to ensure that the target sites are reachable at all.
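The "expiring soon" check boils down to comparing a certificate’s notAfter timestamp against the current time. The sketch below shows that arithmetic using Python’s standard `ssl` module; it does not probe a live service, and the 14 day threshold and the function names are assumptions rather than values taken from Void’s alerting rules.

```python
import ssl
from datetime import datetime, timezone


def days_until_expiry(not_after: str, now: datetime) -> float:
    """Days between `now` and a certificate's notAfter timestamp."""
    # notAfter uses the format returned by SSLSocket.getpeercert(),
    # for example "Jul 29 00:00:00 2023 GMT".
    expires = ssl.cert_time_to_seconds(not_after)  # seconds since the epoch
    return (expires - now.timestamp()) / 86400


def expiring_soon(not_after: str, now: datetime, threshold_days: float = 14) -> bool:
    """True when the certificate expires within the (assumed) threshold."""
    return days_until_expiry(not_after, now) < threshold_days


now = datetime(2023, 7, 19, tzinfo=timezone.utc)
remaining = days_until_expiry("Jul 29 00:00:00 2023 GMT", now)
```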

Compiler Cache Exporter

Void’s build farm makes use of ccache to speed up rebuilds when a build needs to be stopped and restarted. This is rarely useful because software has already had a test build by the time it makes it to our systems. However, it does help for large packages such as chromium, Firefox, and boost, where a failure can occur due to an out of space condition or memory exhaustion. Having the compiler cache statistics allows us to determine if we’re efficiently using the cache.

Repository Exporter

The repository exporter is custom software that runs in two different configurations for Void. In the first configuration it checks our internal sync workflows and repository status. The metrics that are reported include the last time a given repository was updated, how long it took to copy from its origin builder to the shadow mirror, and whether or not the repository is currently staging changes or if the data is fully consistent. This status information allows maintainers to quickly and easily check whether a long running build has fully flushed through the system and the repositories are in steady state. It also provides a convenient way for us to catch problems with stuck rsync jobs where the rsync service may have become hung mid-copy.

In the second deployment the repo exporter looks not at Void’s repos, but all of the mirrors. The information gathered in this case is whether the remote repo is still synchronizing with the current repodata or not, and how far behind the origin the remote repo is. The exporter can also work out how long a given mirror takes to sync if the remote mirror has configured timer files in their sync workflow, which can help us to alert a mirror sponsor to an issue at their end.
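To give a feel for what such an exporter produces, here is a minimal sketch that computes how far each mirror trails the origin and renders the result in the Prometheus text exposition format. The metric name `repo_mirror_lag_seconds`, the mirror names, and the timestamps are hypothetical and are not taken from Void’s actual exporter.

```python
from typing import Dict


def mirror_lag_seconds(origin_updated: float, mirror_updated: float) -> float:
    """How far a mirror trails the origin; zero when it is fully caught up."""
    return max(0.0, origin_updated - mirror_updated)


def render_metrics(origin_updated: float, mirrors: Dict[str, float]) -> str:
    """Render one gauge per mirror in the Prometheus text exposition format."""
    lines = ["# TYPE repo_mirror_lag_seconds gauge"]
    for name in sorted(mirrors):
        lag = mirror_lag_seconds(origin_updated, mirrors[name])
        lines.append(f'repo_mirror_lag_seconds{{mirror="{name}"}} {lag}')
    return "\n".join(lines) + "\n"


# Hypothetical last-update timestamps (seconds) for the origin and two mirrors.
output = render_metrics(1000.0, {"mirror-a": 1000.0, "mirror-b": 400.0})
```

Serving text like `output` over HTTP is all an exporter needs to do for Prometheus to scrape it.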

Logs

Logs in Void’s infrastructure are conceptually not unlike the files on disk in /var/log on a Void system. We have two primary systems that store and retrieve logs within our fleet.

Build Logs

The build system produces copious amounts of log output that we need to retain effectively forever, so that when a problem occurs in a more recent version of a package we can look back and see whether it has always been present. Because of this, we use buildbot’s built-in log storage to store a large volume of logs on disk with locality to the build servers. These build logs aren’t searchable, nor are they structured. They are just the output of the build workflow and xbps-src’s status messages written to disk.

Service Logs

Service logs are a bit more interesting, since these are logs that come from the broad collection of tasks that run on Nomad and may be themselves entirely ephemeral. The sync processes are a good example of this workflow where the process only exists as long as the copy runs, and then the task goes away, but we still need a way to determine if any faults occurred. To achieve this result, we stream the logs to Loki.

Loki is a complex distributed log processing system which we run in all-in-one mode to reduce its operational overhead. The practical benefit of Loki is that it handles the full text searching and label indexing of our structured log data. Structured logs simply refers to the idea that the logs are more than just raw text, and have some organizational hierarchy such as tags, JSON data, or a similar kind of metadata that enables fast and efficient cataloging of text data.
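The value of labels is easiest to see in a small model: index entries by their labels, narrow the search with the index, and only then scan the text. The class below is a toy sketch of that idea, not Loki’s implementation or API; the label names and log lines are made up.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Entry = Tuple[Dict[str, str], str]  # (labels, raw log line)


class LabelIndex:
    """Index log entries by label so queries only scan matching entries."""

    def __init__(self) -> None:
        self.entries: List[Entry] = []
        self.by_label: Dict[Tuple[str, str], Set[int]] = defaultdict(set)

    def add(self, labels: Dict[str, str], line: str) -> None:
        position = len(self.entries)
        self.entries.append((labels, line))
        for pair in labels.items():
            self.by_label[pair].add(position)

    def query(self, labels: Dict[str, str], contains: str = "") -> List[str]:
        """Lines carrying every given label whose text includes `contains`."""
        if not labels:
            matches = set(range(len(self.entries)))
        else:
            matches = set.intersection(
                *(self.by_label.get(pair, set()) for pair in labels.items())
            )
        return [self.entries[i][1] for i in sorted(matches) if contains in self.entries[i][1]]


index = LabelIndex()
index.add({"job": "sync", "host": "a"}, "rsync finished")
index.add({"job": "sync", "host": "b"}, "rsync error: timeout")
index.add({"job": "ircbot", "host": "a"}, "connected")
errors = index.query({"job": "sync"}, contains="error")
```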

Using this Data

Just collecting metrics and logs is one thing; actually using them to draw meaningful conclusions about the fleet and what it’s doing is another. We want to be able to visualize the data, but we also don’t want to have to constantly be watching graphs to determine when something is wrong. We use different systems to access the data depending on whether a human or a machine is going to watch it.

For human access, we make use of Grafana to display nice graphs and dashboards. You can actually view all our public dashboards at https://grafana.voidlinux.org where you can see the mirror status, the builder status, and various other at-a-glance views of our systems. We use Grafana to quickly explore the data and query logs when diagnosing a fault because it’s extremely well optimized for this use case. We are also able to edit dashboards on the fly to produce new views of data which can help explain or visualize a fault.

For machines, we need some other way to observe the data. This kind of workflow, where we want the machine to observe the data and raise an alarm or alert if something is wrong is actually built in to Prometheus. We just load a collection of alerting rules which tell Prometheus what to look for in the pile of data at its disposal.

These rules look for things like predictions that the amount of free disk space will reach zero within 4 hours, the system load being too high for too long, or a machine thrashing too many context switches. Since these rules use the same query language that humans use to interactively explore the data, it allows for one-off graphs to quickly become alerts if we decide an issue that is intermittent is something we should keep an eye on long term. These alerts then raise conditions that a human needs to validate and potentially respond to, but that isn’t something Prometheus does.
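The disk space prediction is a linear extrapolation: fit a line through recent samples and ask where it will be a few hours from now. The sketch below does this with an ordinary least-squares fit, in the spirit of PromQL’s `predict_linear` function. It extrapolates from the last sample rather than from the rule evaluation time, and the sample values are hypothetical.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (unix timestamp in seconds, value)


def predict_linear(samples: List[Sample], seconds_ahead: float) -> float:
    """Least-squares extrapolation `seconds_ahead` past the last sample."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_t = sum(t for t, _ in samples) / n
    mean_v = sum(v for _, v in samples) / n
    cov = sum((t - mean_t) * (v - mean_v) for t, v in samples)
    var = sum((t - mean_t) ** 2 for t, _ in samples)
    if var == 0:
        raise ValueError("samples must span more than one timestamp")
    slope = cov / var
    intercept = mean_v - slope * mean_t
    return intercept + slope * (samples[-1][0] + seconds_ahead)


def disk_will_fill(samples: List[Sample], hours: float = 4) -> bool:
    """True when predicted free space reaches zero within `hours`."""
    return predict_linear(samples, hours * 3600) <= 0


# Hypothetical free-space readings in bytes, one per hour, shrinking steadily.
free_bytes = [(0.0, 30e9), (3600.0, 20e9), (7200.0, 10e9)]
alert = disk_will_fill(free_bytes)
```

With these readings the disk loses 10 GB an hour and has 10 GB left, so the four hour prediction is well below zero and `alert` is true.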

Fortunately for managing alerts, we can simply deploy the Prometheus Alertmanager, and this is what we do. This dedicated software takes care of receiving, deduplicating and grouping, and then forwarding alerts to other systems to actually do the summoning of a human to do something about the alert. In larger organizations, an alertmanager configuration would also route different alerts to different teams of people. Since Void is a relatively small organization, we just need the general pool of people who can do something to be made aware. There are lots of ways to do this, but the easiest is to just send the alerts to IRC.
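Deduplicating and grouping can be pictured as follows: drop alerts whose label sets are identical, then bucket what remains by a chosen set of labels so that related alerts arrive as one notification. This is a simplified model and not Alertmanager’s code; the alert names and labels are made up.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Alert = Dict[str, str]  # label name -> label value


def group_alerts(
    alerts: Iterable[Alert], group_by: Tuple[str, ...]
) -> Dict[Tuple[str, ...], List[Alert]]:
    """Drop alerts with identical label sets, then bucket the rest by `group_by`."""
    seen = set()
    groups: Dict[Tuple[str, ...], List[Alert]] = defaultdict(list)
    for alert in alerts:
        fingerprint = frozenset(alert.items())
        if fingerprint in seen:
            continue  # exact duplicate of an alert already received
        seen.add(fingerprint)
        key = tuple(alert.get(label, "") for label in group_by)
        groups[key].append(alert)
    return dict(groups)


# Hypothetical incoming alerts; the first two are identical.
incoming = [
    {"alertname": "DiskFull", "host": "a"},
    {"alertname": "DiskFull", "host": "a"},
    {"alertname": "DiskFull", "host": "b"},
    {"alertname": "HighLoad", "host": "a"},
]
grouped = group_alerts(incoming, group_by=("alertname",))
```

Each key in `grouped` would then become a single message to the notification channel.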

This involves an IRC bot, and fortunately Google already had one publicly available we could run. The alertrelay bot connects to IRC on one end and alertmanager on the other and passes alerts to an IRC channel where all the maintainers are. We can’t acknowledge the alerts from IRC, but most of the time we’re just generally keeping an eye on things and making sure no part of the fleet crashes in a way that automatic recovery doesn’t work.

Monitoring for Void - Altogether

Between metrics and logs we can paint a complete picture of what’s going on anywhere in the fleet and the status of key systems. Whether it’s a performance question or an outage in progress, the tools at our disposal allow us to introspect systems without having to log in directly to any particular system.

This has been day three of Void’s infrastructure week. Check back tomorrow to learn about what we do when things go wrong, and how we recover from failure scenarios. This post was authored by maldridge who runs most of the day to day operations of the Void fleet. Feel free to ask questions on GitHub Discussions or in IRC.